Breast Center | The Research Team of Prof. Wang Shu Provides a Solution to Streamline Intraoperative Cancer Diagnosis

Prof. Wang Shu's Team Publishes Intelligent Optical Imaging Research in an Authoritative Journal

2024-07-30

The team led by Professor Wang Shu from the Breast Center at Peking University People's Hospital (PKUPH) has published an article titled "Potential Rapid Intraoperative Cancer Diagnosis Using Dynamic Full-Field Optical Coherence Tomography and Deep Learning: A Prospective Cohort Study in Breast Cancer Patients" in the renowned journal Science Bulletin (IF=18.8). The study combines dynamic full-field optical coherence tomography (D-FFOCT) with deep learning algorithms to achieve rapid, intelligent diagnosis, offering a promising way to optimize intraoperative decision-making. The co-first authors are Dr. Zhang Shuwei from the Breast Center at PKUPH, Dr. Yang Bin from the China ESG Institute at the Capital University of Economics and Business, and Chief Surgeon Yang Houpu from the Breast Center at PKUPH. Professor Wang Shu is the primary corresponding author.

Breast cancer is the most common cancer in women. A critical challenge in its treatment is to preserve as much normal tissue as possible and to minimize physical harm to patients, so precise intraoperative diagnosis is crucial in breast cancer surgery. However, traditional intraoperative assessments based on hematoxylin and eosin (H&E) histology are time-consuming and tedious, and they consume specimen tissue.

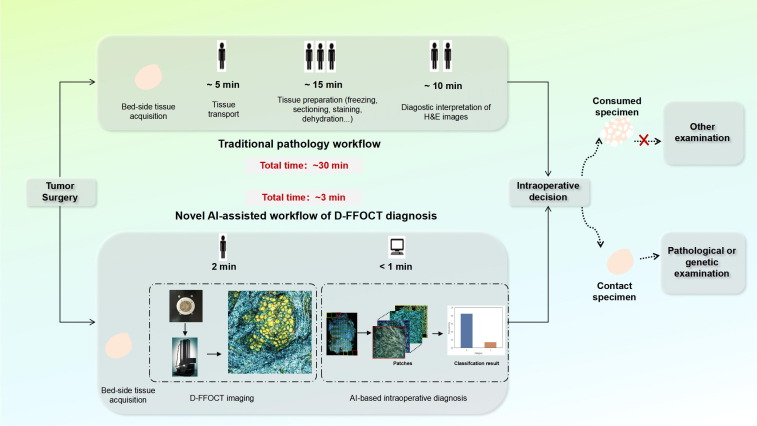

To address these shortcomings of traditional intraoperative assessment, the team designed a near-real-time automated cancer diagnosis workflow that combines D-FFOCT and deep learning for bedside tumor diagnosis during surgery. D-FFOCT is a label-free optical imaging technology that uses light interference to capture intracellular metabolic features, creating internal contrast and providing high-resolution visualization of live cells to establish a “virtual histology”. However, interpreting D-FFOCT images, like interpreting H&E histological images, requires trained clinicians, and pathology services are in short supply worldwide, even in some developed countries. To solve this problem, the team built a deep learning model by fine-tuning a Swin Transformer on D-FFOCT patches.

Professor Wang Shu and her team members used the model to assess the diagnostic accuracy of classifying D-FFOCT slides as benign or malignant, and simulated the evaluation of breast-conserving surgery (BCS) margins. Their prospective cohort trial captured D-FFOCT images from April 26 to June 20, 2018, encompassing 48 benign lesions, 114 invasive ductal carcinomas (IDC), 10 invasive lobular carcinomas, 4 cases of ductal carcinoma in situ (DCIS), and 6 rare tumors. The deep learning model yielded excellent performance, with an accuracy of 97.62%, a sensitivity of 96.88% and a specificity of 100%; only one IDC was misclassified. Meanwhile, the acquisition of D-FFOCT images was non-destructive and did not require any tissue preparation or staining procedures. Furthermore, in the simulated intraoperative margin evaluation procedure, the time needed for this novel workflow (approximately 3 minutes) was significantly shorter than that needed for traditional procedures (approximately 30 minutes).
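For readers less familiar with these metrics, the short Python sketch below shows how accuracy, sensitivity and specificity follow from a confusion matrix. The article does not report the size of the evaluation set, so the counts used here (32 malignant and 10 benign slides, with one malignant slide missed) are hypothetical. They were chosen only because they are consistent with the reported percentages and with a single misclassified IDC, and the function name `diagnostic_metrics` is likewise illustrative.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Compute standard binary-classification metrics from confusion-matrix counts.

    tp: malignant slides correctly called malignant
    fn: malignant slides wrongly called benign
    tn: benign slides correctly called benign
    fp: benign slides wrongly called malignant
    """
    total = tp + fn + tn + fp
    return {
        "accuracy": (tp + tn) / total,      # share of all slides classified correctly
        "sensitivity": tp / (tp + fn),      # share of malignant slides detected
        "specificity": tn / (tn + fp),      # share of benign slides correctly cleared
    }


# Hypothetical counts (an assumption, not taken from the article):
# 32 malignant slides with one missed, 10 benign slides with none misclassified.
metrics = diagnostic_metrics(tp=31, fn=1, tn=10, fp=0)

for name, value in metrics.items():
    print(f"{name}: {value:.2%}")
```

Under these assumed counts, a single false negative lowers both accuracy and sensitivity while leaving specificity at 100%, which matches the pattern of the figures reported above.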

These findings indicate that the combination of D-FFOCT and deep learning algorithms can streamline intraoperative cancer diagnosis. It is expected that this novel workflow may enable an automated intraoperative diagnostic process for breast cancer patients in the future.

Paper Link: http://www.sciencedirect.com/science/article/pii/S2095927324002172?via%3Dihub